Corpus research methods

There are different well-established CL methods to research language usage through the examination of naturally occurring data. These methods stress the importance of frequency and repetition across texts and corpora to create saliency. These methods can be grouped in four categories:

–Analysis of keywords. These are words that are unusually frequent in corpus A when compared with corpus B. This is a Quantitative method that examines the probability to find/not to find a set of words in a given corpus against a reference corpus. This method is said to reduce both researchers´ bias in content analysis and cherry-picking in grounded theory.

–Analysis of collocations. Collocations are words found within a given span (-/+ n words to the left and right) of a node word. This analysis is based on statistical tests that examine the probability to find a word within a specific lexical context in a given corpus. There are different collocation strength measures and a variety of approaches to collocation analysis (Gries, 2013). A collocational profile of a word, or a string of words, provides a deeper understanding of the meaning of a word and its contexts of use.

–Colligation analysis. This involves the analysis of the syntagmatic patterns where words, and string of words, tend to co-occur with other words (Hoey, 2005). Patterning stresses the relationship between a lexical item and a grammatical context, a syntactic function (i.e. postmodifiers in noun phrases) and its position in the phrase or in the clause. Potentially, every word presents distinctive local colligation analysis. Word Sketches have become a widely used way to examine patterns in corpora.

–N-grams. N-gram analysis relies on a bottom-up computational approach where strings of words (although other items such as part of speech tags are perfectly possible) are grouped in clusters of 2,3,4,5 or 6 words and their frequency is examined. Previous research on n-grams shows that different domains (topics, themes) and registers (genres) offer different preferences in terms of the n-grams most frequently used by expert users.

Why use corpora and corpus-related tools in the language classroom?

Quote as:

Pérez-Paredes, P., & Bedmar, B. D. (2009). Language corpora and the language classroom. Materiales de formación del profesorado de lengua extranjera, CARM, Murcia, pp. 1-48.

In corpus-inspired pedagogy, “rules are not restrictive, they are not “do not” rules”; they are “try this one” rules where you can hardly go wrong. There is an open-ended range of possibilities and you can try your skill [..] trying to say what you want to say” (Sinclair 1991: 493).

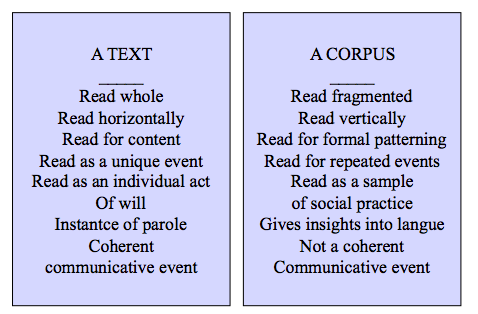

Concordancing can highlight patterns of repetition and variation in text, thus favouring the analysis of large specific schemata into smaller and more general ones, and/or the synthesis of small general schemata starting from larger more specific ones.

Analysis and synthesis leading to “knowledge restructuring”.

Corpus linguistics and the comparative approach:

Determining whether a feature is frequent or rare requires a comparative approach: An analyst cannot legitimately claim that a feature is frequent in a register until she has observed the less frequent use of that same feature in other registers. But this also requires a quantitative approach: An analyst cannot legitimately claim that a feature is more frequent in one register than another unless he has counted the occurrences of the feature in each register. Since most people notice unusual characteristics more than common ones, simply relying on what you notice in a register is not a reliable way to identify register features. (Biber & Conrad, 2009: 56).

Insights

Randi Rappen on the use of corpora in language education

Prescriptivism vs. descriptive linguistics

Corpus tools repositories

Word lists

Exploring contexts of AWL (dictionary-based)

Test the AWL in your own papers or highlight them.

Online DBs

Collins Cobuild Grammar patterns

Exploration tools

Versa Text information: word cloud, concorndacer and profiler

Text Analyzer: rate the difficulty level of a text according to the Common European Framework.

Webcorp (The web is your corpus)

Taporware tools (Alberta)

Concordancers

Antconc (Win, MacOS, lINUX)

Collocate 1.0 for windows (Michael Barlow)

Sketch Engine how to videos (new interface 2018)

Deconstructing discourse

Generate word lists (Input url)

Web as a corpus (n-gram browser)

Microsoft n-gram tool (just for fun and interesting lists of most frequent 100k words based on bing data mining)

Online corpora

Academic words in American English (Mark Davies COCA)

CRA (Corpus of Research Articles)

British Academic Written English Corpus (BAWE) Sketch engine gateway

Do-it-yourself tools

Advanced users

Beautifulsoup parser (Python)